Yiding Liu

刘亦丁

TikTok

Biography

I am currently a research engineer at TikTok, working on language models.

Prior to that, I was a research engineer at Baidu, working on information retrieval with Dr. Dawei Yin and Dr. Shuaiqiang Wang.

I received my Ph.D. degree in 2020 from Nanyang Technological University (NTU), where I was advised by Prof. Cong Gao as a member of the Data Management and Analytics Lab (DMAL).

Prior to NTU, I received my B.Eng. degree from the University of Electronic Science and Technology of China (UESTC) in 2015, advised by Prof. Tao Zhou.

Recent Papers

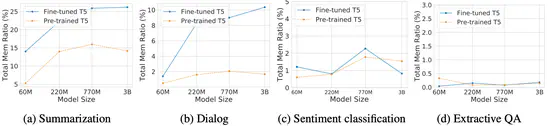

Exploring Memorization in Fine-tuned Language Models

We conduct the first comprehensive analysis of language models’ (LMs) memorization during fine-tuning across tasks. Our studies indicate that memorization differs strongly among fine-tuning tasks.

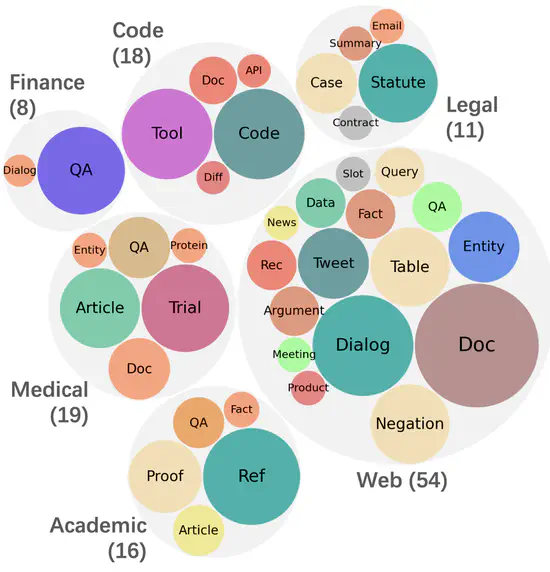

MAIR: A Massive Benchmark for Evaluating Instructed Retrieval

We propose MAIR (Massive Instructed Retrieval Benchmark), a heterogeneous IR benchmark that includes 126 distinct IR tasks across 6 domains, collected from existing datasets. We benchmark state-of-the-art instruction-tuned text embedding models and re-ranking models.

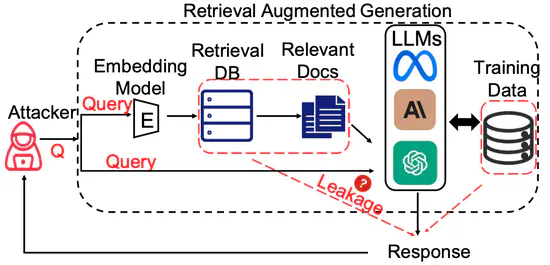

The Good and The Bad: Exploring Privacy Issues in Retrieval-Augmented Generation (RAG)

We conduct extensive empirical studies with novel attack methods, which demonstrate the vulnerability of RAG systems to leaking the private retrieval database. We further reveal that RAG can mitigate the leakage of the LLMs’ training data.

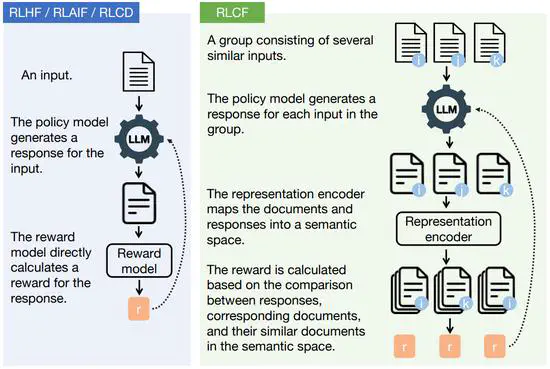

Aligning the Capabilities of Large Language Models with the Context of Information Retrieval via Contrastive Feedback

We propose an unsupervised alignment method, namely Reinforcement Learning from Contrastive Feedback (RLCF), empowering LLMs to generate both high-quality and context-specific responses.

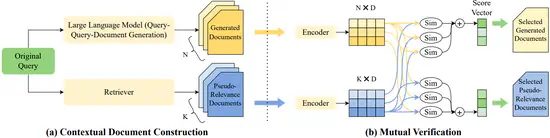

Mill: Mutual Verification with Large Language Models for Zero-shot Query Expansion

We propose a novel zero-shot query expansion framework utilizing both LLM-generated and retrieved documents.

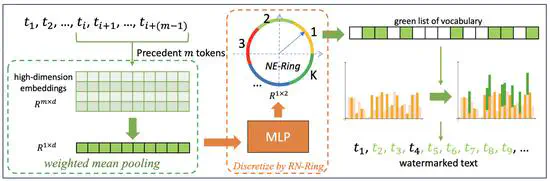

A Robust Semantics-based Watermark for Large Language Model against Paraphrasing

We propose SemaMark, a semantics-based watermark framework. It leverages sentence semantics as an alternative to simple hashes of tokens, since paraphrasing is likely to preserve the semantic meaning of the sentences.

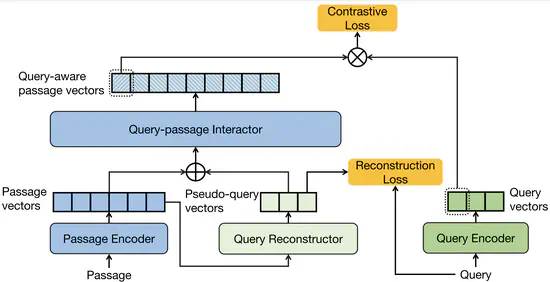

I^3 Retriever: Incorporating Implicit Interaction in Pre-trained Language Models for Passage Retrieval

We incorporate implicit interaction into dual-encoders and propose the I^3 retriever. In particular, our implicit interaction paradigm leverages generated pseudo-queries to simulate query-passage interaction, and is jointly optimized with the query and passage encoders in an end-to-end manner.

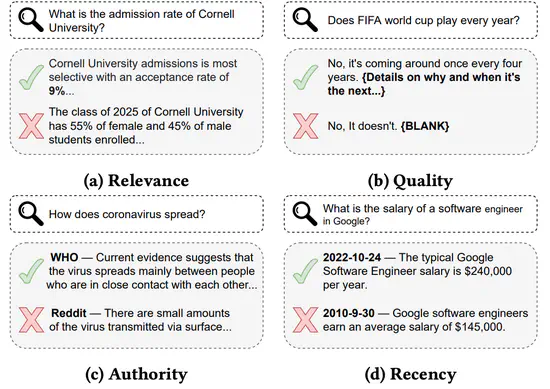

Pretrained Language Model based Web Search Ranking: From Relevance to Satisfaction

We focus on ranking by user satisfaction rather than relevance in web search, and propose a PLM-based framework, namely SAT-Ranker, which comprehensively models different dimensions of user satisfaction in a unified manner.